Thursday, March 24, 2011

Does solar activity have to KEEP going up to cause warming? Mike Lockwood responds

Posted at WUWT a couple of days ago. That original post included some discursions that I have decided are better placed at the end with the other addenda, so this is the optimized version.

My first post on this subject remarked on the number of scientists who assert that late 20th century global warming cannot have been driven by the sun because solar activity was not trending upwards after 1950, even though it remained at peak levels. To follow up, I asked a dozen of these scientists: does solar activity have to KEEP going up to cause warming?

Wouldn't that be like saying you can't heat a pot of water by turning the flame to maximum and leaving it there, that you have to turn the heat up gradually to get warming?

My email suggested that these scientists (more than half of whom are solar scientists) must be implicitly assuming that by 1980 or so, ocean temperatures had already equilibrated to the 20th century's high level of solar activity. Then they would be right. Continued forcing at the same average level would not cause any additional warming and any fall off in forcing would have a cooling effect. But without this assumption—if equilibrium had not yet been reached—then continued high levels of solar activity would cause continued warming.
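The pot-on-the-flame point can be made concrete with the simplest energy balance model, in which temperature relaxes toward the equilibrium for the current forcing with a single time constant τ. This is only an illustrative sketch: the function name, the sensitivity of 0.5 K per W m⁻², and the two time constants (5 and 30 years) are hypothetical values chosen for contrast, not estimates from any of the scientists quoted here. The forcing is switched to its maximum and then held flat.

```python
import math

def one_box_response(forcing, tau, sensitivity, dt=1.0):
    """One-box energy balance model: dT/dt = (sensitivity * F - T) / tau.

    Assumes forcing is constant within each step of length dt (years) and
    uses the exact exponential update for that step. Returns the temperature
    anomaly (K) at the end of each step, starting from T = 0.
    """
    decay = math.exp(-dt / tau)
    temp = 0.0
    temps = []
    for f in forcing:
        t_eq = sensitivity * f               # equilibrium anomaly for this forcing
        temp = t_eq + (temp - t_eq) * decay  # relax toward it with time constant tau
        temps.append(temp)
    return temps

# Hypothetical forcing: stepped up to 1 W m^-2 and held there for 60 years.
flat_forcing = [1.0] * 60
fast = one_box_response(flat_forcing, tau=5.0, sensitivity=0.5)
slow = one_box_response(flat_forcing, tau=30.0, sensitivity=0.5)

# Warming during years 31-60, when the forcing is not changing at all.
print(f"tau =  5 yr: {fast[59] - fast[29]:.3f} K of further warming")
print(f"tau = 30 yr: {slow[59] - slow[29]:.3f} K of further warming")
```

With a 5-year time constant the system is essentially at equilibrium by year 30, so flat forcing adds almost nothing afterwards. With a 30-year time constant the same flat forcing still adds about 0.12 K in years 31 to 60, nearly a quarter of the 0.5 K equilibrium warming. Which case applies is exactly the equilibration question.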

Pretty basic, but none of these folks had even mentioned equilibration. If they were indeed assuming that equilibrium had been reached, and this was the grounds on which they were dismissing a solar explanation for late 20th century warming, then I urged that this assumption needed to be made explicit, and the arguments for it laid out.

I have received a half dozen responses so far, all of them very gracious and quite interesting. The short answer is yes, respondents are for the most part defending (and hence at least implicitly acknowledging) the assumption that equilibration is rapid and should have been reached prior to the most recent warming. So that's good. We can start talking about the actual grounds on which so many scientists are dismissing a solar explanation.

Do their arguments for rapid equilibration hold up? Here the short answer is no, and you might be surprised to learn who pulled out all the stops to demolish the rapid equilibration theory.

From "almost immediately" to "20 years"

That is the range of estimates I have been getting for the time it takes the ocean temperature gradient to equilibrate in response to a change in forcing. Prima facie, this seems awfully fast, given how the planet spent the last 300+ years emerging from the Little Ice Age. Even in the bottom of the little freezer there were never more than 20 years of "stored cold"? What is their evidence?

First up is Mike Lockwood, Professor of Space Environment Physics at the University of Reading. Here is the quote from Mike Lockwood and Claus Fröhlich that I was responding to (from their 2007 paper, "Recent oppositely directed trends in solar climate forcings and the global mean surface air temperature"):

The Team springs into action, ... on the side of a slow adjustment to equilibrium?

Professor Lockwood cites the short "time constant" estimated by Stephen Schwartz, adding that "almost all estimates have been in the 1-10 year range," and indeed, it seems that rapid equilibration was a pretty popular view just a couple of years ago, until Schwartz came along and tied equilibration time to climate sensitivity. Schwartz 2007 is actually the beginning of the end for the rapid equilibration view. Behold the awesome number-crunching, theory-constructing power of The Team when their agenda is at stake.

The CO2 explanation for late 20th century warming depends on climate being highly sensitive to changes in radiative forcing. The direct warming effect of CO2 is known to be small, so it must be multiplied up by feedback effects (climate sensitivity) if it is to account for any significant temperature change. Schwartz shows that in a simple energy balance model, rapid equilibration implies a low climate sensitivity. Thus his estimate of a very short time constant was dangerously contrarian, prompting a mini-Manhattan Project from the consensus scientists, with the result that Schwartz' short time constant estimate has now been quite thoroughly shredded, all on the basis of what appears to be perfectly good science.

Too bad nobody told our solar scientists that the rapid equilibrium theory has been hunted and sunk like the Bismarck. ("Good times, good times," as Phil Hartman would say.)

Schwartz' model

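Schwartz' 2007 paper introduced a new way of estimating climate sensitivity. He showed that when the climate system is represented by the simplest possible energy balance model, the following relationship should hold: $\tau = C\lambda^{-1}$, where τ is the time constant of the climate system (a measure of time to equilibrium), C is the heat capacity of the system, and $\lambda^{-1}$ is climate sensitivity.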

Time to equilibrium will also be longer the larger the heat capacity of the system. The surface of the planet has to get hot enough to push enough longwave radiation through the atmosphere to balance the increase in sunlight. The more energy gets absorbed into the oceans, the longer it takes for the surface to reach that necessary temperature.

$\tau = C\lambda^{-1}$ can be rewritten as $\lambda^{-1} = \tau/C$, so all Schwartz needs are estimates for τ and C, and he has an estimate for climate sensitivity.
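As a worked check on that arithmetic, here is a short Python sketch using figures that appear in Schwartz' results as quoted in this post: a time constant of about 5 years (the middle of his 4-6 year range) and a sensitivity of 0.30 K/(W m⁻²). The implied heat capacity C is simply backed out from those two numbers, and the 3.7 W m⁻² forcing for doubled CO2 is an assumed, commonly cited value rather than a figure from this post. The last loop runs the relationship in reverse to show what time constant the IPCC's 2 to 4.5 K range would require at the same C.

```python
F_2X = 3.7   # assumed radiative forcing for doubled CO2, W m^-2 (commonly cited value)
TAU = 5.0    # Schwartz' time constant, years (middle of the 4-6 year range)
SENS = 0.30  # Schwartz' climate sensitivity, K per (W m^-2)

def sensitivity_from(tau, heat_capacity):
    """lambda^-1 = tau / C, in K per (W m^-2)."""
    return tau / heat_capacity

def tau_from(sens, heat_capacity):
    """tau = C * lambda^-1, in years."""
    return heat_capacity * sens

C = TAU / SENS  # implied effective heat capacity, W yr m^-2 K^-1
print(f"implied C = {C:.1f} W yr m^-2 K^-1")
print(f"warming for doubled CO2 = {sensitivity_from(TAU, C) * F_2X:.2f} K")

# Same C, but sensitivities matching the IPCC 2-4.5 K per doubling range.
for dT2x in (2.0, 4.5):
    print(f"{dT2x} K per doubling needs tau = {tau_from(dT2x / F_2X, C):.1f} yr")
```

At the same heat capacity, 2 to 4.5 K per doubling corresponds to time constants of roughly 9 to 20 years, which is why a short time constant put Schwartz at odds with the consensus sensitivity range.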

Here too Schwartz keeps things as simple as possible. In estimating C, he treats the oceans as a single heat reservoir. Deeper layers participate less in absorbing and releasing heat than shallower ones, but all are assumed to respond in lockstep. There is no time-consuming process of heat transfer from upper layers to lower layers.

For the time constant, Schwartz assumes that changes in GMAST (the Global Mean Air Surface Temperature) can be regarded as Brownian motion, subject to Einstein's Fluctuation Dissipation Theorem. In other words, he is assuming that GMAST is "subject to random perturbations," but otherwise "behaves as a first-order Markov or autoregressive process, for which a quantity is assumed to decay to its mean value with time constant τ."

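To find τ, Schwartz examines the autocorrelation of the (detrended) temperature time series as a function of lag. If GMAST really is a first-order autoregressive process, its autocorrelation decays exponentially with lag, $r(\Delta t) = e^{-\Delta t/\tau}$, so each lag yields an estimate $\tau = -\Delta t/\ln r(\Delta t)$. This characteristic time to decorrelation is the time constant.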
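The estimator itself is easy to sketch. The Python below, assuming numpy is available, generates a synthetic first-order autoregressive series with a known time constant (5 years, a hypothetical choice) and recovers it from the lag autocorrelation using $\tau = -\Delta t/\ln r(\Delta t)$. Schwartz worked with a detrended observational record going back to 1880; this synthetic series has no trend and is deliberately very long, so it shows the method working under its own assumptions, not how it behaves on real data.

```python
import numpy as np

def autocorr(x, lag):
    """Sample autocorrelation of x at the given lag (mean removed)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    return np.dot(x[:-lag], x[lag:]) / np.dot(x, x)

def schwartz_tau(x, lag=1, dt=1.0):
    """Time constant implied by an AR(1) fit: r(lag*dt) = exp(-lag*dt / tau)."""
    r = autocorr(x, lag)
    return -lag * dt / np.log(r)

def simulate_ar1(tau, n, seed=0, dt=1.0):
    """Synthetic AR(1) series with time constant tau (years) and unit noise."""
    rng = np.random.default_rng(seed)
    a = np.exp(-dt / tau)  # one-step persistence
    noise = rng.normal(size=n)
    x = np.zeros(n)
    for i in range(1, n):
        x[i] = a * x[i - 1] + noise[i]
    return x

series = simulate_ar1(tau=5.0, n=20_000)
for lag in (1, 2, 4):
    print(f"lag {lag} yr: tau = {schwartz_tau(series, lag):.2f} yr")
```

For a true AR(1) series every lag gives roughly the same τ, which is the consistency the method relies on.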

The Team's critique

Given Schwartz' time constant estimate of 4-6 years:

Part of the discrepancy could be from Schwartz' use of a lower heat capacity estimate than is used in the AR4 model, but Foster et al. judge that:

Daniel Kirk-Davidoff's two heat-reservoir model

This is interesting stuff. Kirk-Davidoff finds that adding a second weakly coupled heat reservoir changes the behavior of the energy balance model dramatically. The first layer of the ocean responds quickly to any forcing, then over a much longer time period, this upper layer warms the next ocean layer until equilibrium is reached. This elaboration seems necessary as a matter of realism and it could well be taken further (by including further ocean depths, and by breaking the layers down into sub-layers).

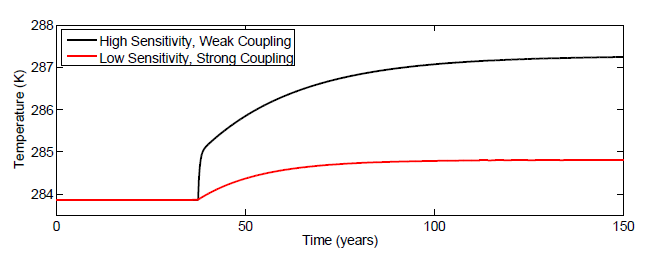

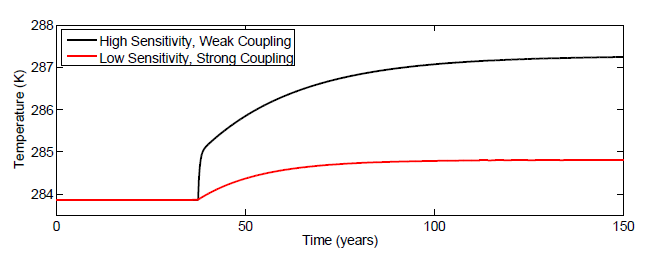

K-D shows that when Schwartz' method for estimating the time constant is applied to data generated by a two heat-reservoir model it latches onto the rapid temperature response of the upper ocean layer (at least when used with such a short time series as Schwartz employs). As a result, it shows a short time constant even when the coupled equilibration process is quite slow:

"Weak coupling" here refers to the two heat reservoir model. "Strong coupling" is the one reservoir model.

The initial jump up in surface temperatures in the two reservoir model corresponds to the rapid warming of the upper ocean layer, which in the particular model depicted here then warms the next ocean layer for another hundred plus years, with surface temperatures eventually settling down to a temperature increase more than twice the size of the initial spike.
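A minimal two-reservoir sketch reproduces that shape. The parameter values below (feedback λ = 1 W m⁻² K⁻¹, coupling γ = 1.5 W m⁻² K⁻¹, heat capacities of 8 and 100 W yr m⁻² K⁻¹) are hypothetical, picked only so that, as in the weak-coupling curve described above, the fast initial jump is well under half of the eventual warming. They are not Kirk-Davidoff's published values. Forward-Euler integration with a small step is crude but adequate here.

```python
import math

def two_box_step(forcing=1.0, lam=1.0, gamma=1.5, c1=8.0, c2=100.0,
                 years=400, dt=0.05):
    """Surface-layer temperature after a step in forcing at t = 0.

    Two-reservoir energy balance model (forward Euler):
        c1 dT1/dt = F - lam*T1 - gamma*(T1 - T2)   # upper ocean + surface
        c2 dT2/dt = gamma*(T1 - T2)                # deeper ocean
    Returns T1 at the end of each year.
    """
    t1 = t2 = 0.0
    steps_per_year = round(1 / dt)
    annual = []
    for step in range(1, years * steps_per_year + 1):
        dt1_dt = (forcing - lam * t1 - gamma * (t1 - t2)) / c1
        dt2_dt = gamma * (t1 - t2) / c2
        t1 += dt1_dt * dt
        t2 += dt2_dt * dt
        if step % steps_per_year == 0:
            annual.append(t1)
    return annual

surface = two_box_step()
equilibrium = 1.0  # F / lam: both layers end up here
for year in (1, 5, 10, 50, 100, 200, 400):
    print(f"year {year:3d}: {surface[year - 1] / equilibrium:4.0%} of equilibrium")

# First year in which the surface reaches 1 - 1/e of its *final* warming.
target = (1 - math.exp(-1)) * equilibrium
e_fold_year = next(y for y, t in enumerate(surface, start=1) if t >= target)
print(f"surface reaches 1 - 1/e of equilibrium in year {e_fold_year}")
```

With these made-up numbers the surface gets to roughly 40 percent of its equilibrium warming within a decade, then takes on the order of 90 years to reach 1 - 1/e of it. A one-box fit to the first few years would report a time constant of about 3 years, which describes the upper layer, not the coupled system.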

This bit of realism changes everything. Consider the implications of the two heat reservoir model for the main item of correlative evidence that Schwartz put forward in support of his short time constant finding.

The short recovery time from volcanic cooling

Here is Schwartz' summary of the volcanic evidence:

Gavin Schmidt weighs in

Gavin Schmidt recently had occasion to comment on the time to equilibrium:

Eventually, good total ocean heat content data will reveal near exact timing and magnitude for energy flows in and out of the oceans, allowing us to resolve which candidate forcings actually force, and how strongly. We can also look forward to enough sounding data to directly observe energy transfer between different ocean depths over time, revealing exactly how equilibration proceeds in response to forcing. But for now, time to equilibration would seem to be a wide open question.

Climate sensitivity

This also leaves climate sensitivity as an open question, at least as estimated by heat capacity and equilibration speed. Roy Spencer noted this in support of his more direct method of estimating climate sensitivity, holding that the utility of the fluctuation dissipation approach:

Solar warming is being improperly dismissed

For solar warming theory, the implications of equilibration speed being an open question are clear. We have a host of climatologists and solar scientists who have been dismissing a solar explanation for late 20th century warming on the strength of a short-time-to-equilibrium assumption that is not supported by the evidence. Thus a solar explanation remains viable and should be given much more attention, including much more weight in predictions of where global temperatures are headed.

If 20th century warming was caused primarily by the 20th century's 80-year grand maximum of solar activity, then it was not caused by CO2, which must be relatively powerless: little able either to cause future warming, or to mitigate the global cooling that the present fall off in solar activity portends. The planet likely sits on the cusp of another Little Ice Age. If we unplug the modern world in an unscientific war against CO2 our grandchildren will not thank us.

Addenda

There is more to Lockwood's response, and more that I want to say about it, but this post is already quite long, so for anyone who wants to comment, please don't feel that due diligence requires reading this additional material.

Addendum 1: A slow equilibration process does not necessarily support solar-warming theory and a fast equilibration process does not necessarily support the CO2-warming theory

In my enthusiasm to pitch the importance of being explicit about the role of equilibrium, my email suggested that it could provide a test of which theory is right. If these scientists could show that the temperature gradient of the oceans had reached equilibrium by 1970, or 1986, then their grounds for dismissing a solar explanation for late-20th century warming would be upheld. If equilibrium was not reached, that would support the solar warming theory.

Well, to some extent maybe, but equilibrium alone doesn't really resolve the issue. This was the second topic of Professor Lockwood's response:

Maybe the spectrum shift that accompanies solar magnetic activity has a climate-impacting effect on atmospheric chemistry, or it could be the solar wind, deflecting Galactic Cosmic Radiation from seeding cloud formation. That is Svensmark's GCR-cloud theory. Noting that TSI alone could not have caused 20th century warming does nothing to rebut these solar warming theories.

Where I think Svensmark would agree with Lockwood is in rejecting my suggestion that knowing the state of equilibrium in 1970 or 1980 would tell us which theory is right. His own reply to Lockwood claims that if ocean surface temperature oscillations are controlled for, then the surface temperature tracks the ups and downs in the solar cycle to a tee. I asked Doctor Svensmark if he wanted to chime in and did not get a reply, but there's a good chance he would fall in the short-path-to-equilibrium camp. As the two heat-reservoir model shows, rapid surface temperature responses to forcing do not imply rapid equilibration, but a lot of people are reading them that way.

Also, it is possible that time to equilibrium is slow, but that the 300 year climb out of the Little Ice Age just did happen to reach equilibrium in the late 20th century. Thus I have to agree with Mike that time-to-equilibrium is not in itself determinative. It is one piece of the puzzle. What makes equilibrium particularly needful of attention is how it has been neglected, with so many scientists making crucial assumptions about equilibrium without being explicit about it, or making any argument for those assumptions.

Addendum 2: collection of quotes from scientists

Below is a list of the dozen scientists I emailed, together with quotes where they dismiss a solar explanation for recent warming on grounds that solar activity was not rising, with no qualification about whether equilibrium had been reached. These are just the ones I happened to bookmark over the last few years. Undoubtedly there are many more. It's epidemic!

Most of these folks have responded, so I'll be posting at least 3 more follow-ups as I can get to them.

Professors Usoskin, Schuessler, Solanki and Mursula (2005):

Addendum 3: How little Lockwood's own position is at odds with a solar explanation for late 20th century warming

Suppose the solar activity peak was not in 1985, as Lockwood and Fröhlich claim, but several years later, as a straightforward reading of the data suggests. (As documented in Part 1, solar cycle 22, which began in 1986, was more active than cycle 21 by pretty much every measure.) If the solar activity peak shifts five years, then instead of predicting peak GMAST by 1995, Mike's temperature response formula says peak GMAST should have occurred by 2000, which is pretty close to when it did occur.
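Lockwood's timing rule can be sketched with a one-box model. Assuming, purely for illustration, a forcing that ramps up linearly for a century and then ramps down (a hypothetical shape, not the solar reconstructions Lockwood and Fröhlich actually used), the temperature peak trails the forcing peak by τ ln 2, about 6.9 years for τ = 10. The `plateau` argument, also hypothetical, holds the forcing at its maximum for a while before it falls, which is the case this post is concerned with.

```python
import math

def peak_lag(tau, ramp=100.0, plateau=0.0, dt=0.01):
    """Years from the end of the forcing maximum to the temperature peak.

    One-box model dT/dt = (F - T) / tau, with sensitivity set to 1.
    Hypothetical forcing shape: linear rise over `ramp` years, flat at its
    maximum for `plateau` years, then linear fall over `ramp` years.
    """
    def forcing(t):
        if t < ramp:
            return t / ramp
        if t < ramp + plateau:
            return 1.0
        return max(0.0, 1.0 - (t - ramp - plateau) / ramp)

    decay = math.exp(-dt / tau)
    temp = t = 0.0
    best_temp, best_t = -math.inf, 0.0
    while t < 2 * ramp + plateau:
        f = forcing(t + dt / 2)        # forcing at the middle of the step
        temp = f + (temp - f) * decay  # exact relaxation toward f over dt
        t += dt
        if temp > best_temp:
            best_temp, best_t = temp, t
    return best_t - (ramp + plateau)

for tau in (1.0, 5.0, 10.0):
    print(f"tau = {tau:4.1f} yr: lag {peak_lag(tau):.2f} yr "
          f"(tau ln 2 = {tau * math.log(2):.2f})")
lag = peak_lag(10.0, plateau=10.0)
print(f"tau = 10 yr, 10-yr plateau: peak {lag:.2f} yr after the forcing starts to fall")
```

With τ = 10 years and no plateau, a 1985 forcing peak gives a temperature peak around 1992, inside Lockwood's "before 1995", and moving the forcing peak five years later moves the temperature peak five years later. With a ten-year plateau, the temperature peak comes about 3 years after the forcing starts to fall, some 13 years after the forcing first reaches its maximum.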

Then there is the lack of warming since 2000, which is fully compatible with a solar explanation for late 20th century warming but is seriously at odds with the CO2 theory. Of course 10 or 15 years is not enough data to prove or disprove either theory, but the episode that is held to require a CO2 explanation is even slighter. The post-1970s warming that is said to be incompatible with a solar explanation didn't show a clear temperature signal until 1997 or 1998:

By this standard, the subsequent decade of no warming should be seen as even stronger evidence that climate is being driven by the sun, and the quicker the oceans equilibrate, the less room there is for CO2 driven warming to be hidden by ocean damping. Maybe it is time for another update: "Recent samely directed solar climate forcings and global temperature."

Not samely directed trends, because the equilibration mechanism has to be accounted for, but a leveling off of surface temperatures is just what a solar-driven climate should display when solar activity plummets. This most recent data is a big deal. It's hard to justify reading so much into the very late 20th century step-up in temperature while ignoring the 21st century's lack of warming.

Addendum 4: Errant dicta from Lockwood and Fröhlich

Professor Lockwood says that his updated paper with Claus Fröhlich ("Recent Oppositely Directed Trends II") addresses the issue of equilibrium. Here is the second paragraph of their updated paper:

Still, this is only what a lawyer would call "dicta": the parts of an opinion that don't carry the weight of the decision, but simply comment on roads not taken. Lockwood and Fröhlich proceed to argue that time-to-equilibrium is short, so their assertions about the case where time-to-equilibrium is long are dicta, but it does raise the question of whether they grasp the implications that time-to-equilibrium actually has for their argument.

Addendum 5: an economist's critique of Schwartz

Start with Schwartz' assumption that forcings are random. Not only does solar activity follow a semi-regular 11 year cycle, but Schwartz only looks at GMAST going back to 1880, a period over which solar activity was semi-steadily rising, as was CO2. Certainly the CO2 increase has been systematic, and solar fluctuations may well be too, so the forcings are not all that random it seems.

I'm not a physicist so I can't say how critical the randomness assumption is to Schwartz' model, but I have a more general problem with the randomness assumption. My background is economics, which is all about not throwing away information, and the randomness assumption throws away information in spades.

By assuming that perturbations are random, Schwartz is setting aside everything we can say about the actual time-sequence of forcings. We know, for instance, that the 1991-93 temperature dip coincided with a powerful forcing: the Mount Pinatubo eruption. This allows us to distinguish at least most of this dip as a perturbation rather than a lapse to equilibrium, and this can be done systematically. By estimating how volcanism has affected temperature over the historical record, we can with some effectiveness control for its effects over the entire record. Similarly with other possible forcings. To the extent that their effects are discernible in the temperature record they can be controlled for.

Making use of this partial ability to distinguish perturbations from lapses would give a better picture of the lapse to equilibrium and a better estimate of the lapse rate. Thus even if Schwartz's estimation scheme could be legitimate (maybe given a long enough GMAST record?) the way that it throws away information means that it certainly cannot be efficient. Schwartz's un-economic scheme cannot produce a "best estimate," and probably should not be referenced as such.
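A toy version of this point can be sketched in Python (numpy assumed). It simulates a first-order autoregressive temperature series with a known 3-year time constant, driven partly by random noise and partly by a regular 11-year forcing cycle, a hypothetical stand-in for the solar cycle with a made-up amplitude. Treating all the variability as random, as the lag-autocorrelation method does, overstates τ here; regressing temperature on its own lag and on the known forcing recovers it. This illustrates the value of not throwing information away in one simple case; it is not a re-analysis of Schwartz' data.

```python
import numpy as np

def simulate(tau=3.0, n=20_000, cycle=11.0, amp=3.0, seed=1):
    """Annual AR(1) 'temperature' driven by random noise plus a known,
    regular forcing cycle (hypothetical stand-in for the solar cycle)."""
    rng = np.random.default_rng(seed)
    a = np.exp(-1.0 / tau)
    forcing = amp * np.sin(2 * np.pi * np.arange(n) / cycle)
    noise = rng.normal(size=n)
    temp = np.zeros(n)
    for t in range(1, n):
        temp[t] = a * temp[t - 1] + forcing[t - 1] + noise[t]
    return temp, forcing

def tau_naive(temp):
    """Treat all variability as random: tau from the lag-1 autocorrelation."""
    x = temp - temp.mean()
    r1 = np.dot(x[:-1], x[1:]) / np.dot(x, x)
    return -1.0 / np.log(r1)

def tau_with_forcing(temp, forcing):
    """Use the known forcing: regress T[t] on T[t-1], F[t-1] and a constant."""
    X = np.column_stack([temp[:-1], forcing[:-1], np.ones(len(temp) - 1)])
    coef, *_ = np.linalg.lstsq(X, temp[1:], rcond=None)
    return -1.0 / np.log(coef[0])

temp, forcing = simulate()
print(f"true tau 3.00 | naive {tau_naive(temp):.2f} | "
      f"with forcing {tau_with_forcing(temp, forcing):.2f}")
```

With these made-up settings the naive estimate comes out around 5 years, while the regression that uses the forcing lands close to the true 3 years.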

My first post on this subject remarked on the number of scientists who assert that late 20th century global warming cannot have been driven by the sun because solar activity was not trending upwards after 1950, even though it remained at peak levels. To follow up, I asked a dozen of these scientists: does solar activity have to KEEP going up to cause warming?

Wouldn't that be like saying you can't heat a pot of water by turning the flame to maximum and leaving it there, that you have to turn the heat up gradually to get warming?

My email suggested that these scientists (more than half of whom are solar scientists) must be implicitly assuming that by 1980 or so, ocean temperatures had already equilibrated to the 20th century's high level of solar activity. Then they would be right. Continued forcing at the same average level would not cause any additional warming and any fall off in forcing would have a cooling effect. But without this assumption—if equilibrium had not yet been reached—then continued high levels of solar activity would cause continued warming.

Pretty basic, but none of these folks had even mentioned equilibration. If they were indeed assuming that equilibrium had been reached, and this was the grounds on which they were dismissing a solar explanation for late 20th century warming, then I urged that this assumption needed to be made explicit, and the arguments for it laid out.

I have received a half dozen responses so far, all of them very gracious and quite interesting. The short answer is yes, respondents are for the most part defending (and hence at least implicitly acknowledging) the assumption that equilibration is rapid and should have been reached prior to the most recent warming. So that's good. We can start talking about the actual grounds on which so many scientists are dismissing a solar explanation.

Do their arguments for rapid equilibration hold up? Here the short answer is no, and you might be surprised to learn who pulled out all the stops to demolish the rapid equilibration theory.

From "almost immediately" to "20 years"

That is the range of estimates I have been getting for the time it takes the ocean temperature gradient to equilibrate in response to a change in forcing. Prima facie, this seems awfully fast, given how the planet spent the last 300+ years emerging from the Little Ice Age. Even in the bottom of the little freezer there were never more than 20 years of "stored cold"? What is their evidence?

First up is Mike Lockwood, Professor of Space Environment Physics at the University of Reading. Here is the quote from Mike Lockwood and Claus Fröhlich that I was responding to (from their 2007 paper, "Recent oppositely directed trends in solar climate forcings and the global mean surface air temperature"):

There is considerable evidence for solar influence on the Earth's pre-industrial climate and the Sun may well have been a factor in post-industrial climate change in the first half of the last century. Here we show that over the past 20 years, all the trends in the Sun that could have had an influence on the Earth's climate have been in the opposite direction to that required to explain the observed rise in global mean temperatures.

The estimate in this paper is that solar activity peaked in 1985. Would that really mean the next decade of near-peak solar activity couldn't cause warming? Surely they were assuming that equilibrium temperatures had already been reached. Here is the main part of Mike's response:

Hi Alec,

Thank you for your e-mail and you raise what I agree is a very interesting and complex point. In the case of myself and Claus Froehlich, we did address this issue in a follow-up to the paper of ours that you cite, and I attach that paper.

One has to remember that two parts of the same body can be in good thermal contact but not had time to reach an equilibrium. For example I could take a blow torch to one panel of the hull of a ship and make it glow red hot but I don't have to make the whole ship glow red hot to get the one panel hot. The point is that the time constant to heat something up depends on its thermal heat capacity and that of one panel is much less than that of the whole ship so I can heat it up and cool it down without an detectable effect on the rest of the ship. Global warming is rather like this. We are concerned with the temperature of the Earth’s surface air temperature which is a layer with a tiny thermal heat capacity and time constant compared to the deep oceans. So the surface can heat up without the deep oceans responding. So no we don’t assume Earth surface is in an equilibrium with its oceans (because it isn't).

So the deep oceans are not taking part in global warming and are not relevant but obviously the surface layer of the oceans is. The right question to ask is, "how deep into the oceans do centennial temperature variations penetrate so that we have to consider them to be part of the thermal time constant of the surface?" That sets the ‘effective’ heat capacity and time constant of the surface layer we are concerned about. We know there are phenomena like El-Nino/La Nina where deeper water upwells to influence the surface temperature. So what depth of ocean is relevant to century scale changes in GMAST [Global Mean Air Surface Temperature] and what smoothing time constant does this correspond to?

......

In the attached paper, we cite a paper by Schwartz (2007) that discusses and quantifies the heat capacity of the oceans relevant to GMAST changes and so what the relevant response time constant is. It is a paper that has attracted some criticism but I think it is a good statement of the issues even if the numbers may not always be right. In a subsequent reply to comments he arrives at a time constant of 10 years. Almost all estimates have been in the 1-10 year range.

In the attached paper we looked at the effect of response time constants between 1-10 years and showed that they cannot be used to fit the solar data to the observed GMAST rise. Put simply. The peak solar activity in 1985 would have caused peak GMAST before 1995 if the solar change was the cause of the GMAST rise before 1985.

This is a significant update on Lockwood and Fröhlich's 2007 paper, where it was suggested that temperatures should have peaked when solar activity peaked. Now the lagged temperature response of the oceans is front and center, and Professor Lockwood is claiming that equilibrium comes quickly. When there is a change in forcing, the part of the ocean that does significant warming should be close to done with its temperature response within 10 years.

The Team springs into action, ... on the side of a slow adjustment to equilibrium?

Professor Lockwood cites the short "time constant" estimated by Stephen Schwartz, adding that "almost all estimates have been in the 1-10 year range," and indeed, it seems that rapid equilibration was a pretty popular view just a couple of years ago, until Schwartz came along and tied equilibration time to climate sensitivity. Schwartz 2007 is actually the beginning of the end for the rapid equilibration view. Behold the awesome number-crunching, theory-constructing power of The Team when their agenda is at stake.

The CO2 explanation for late 20th century warming depends on climate being highly sensitive to changes in radiative forcing. The direct warming effect of CO2 is known to be small, so it must be multiplied up by feedback effects (climate sensitivity) if it is to account for any significant temperature change. Schwartz shows that in a simple energy balance model, rapid equilibration implies a low climate sensitivity. Thus his estimate of a very short time constant was dangerously contrarian, prompting a mini-Manhattan Project from the consensus scientists, with the result that Schwartz' short time constant estimate has now been quite thoroughly shredded, all on the basis of what appears to be perfectly good science.

Too bad nobody told our solar scientists that the rapid equilibrium theory has been hunted and sunk like the Bismarck. ("Good times, good times," as Phil Hartman would say.)

Schwartz' model

Schwartz' 2007 paper introduced a new way of estimating climate sensitivity. He showed that when the climate system is represented by the simplest possible energy balance model, the following relationship should hold:

$\tau = C\lambda^{-1}$, where

τ is the time constant of the climate system (a measure of time to equilibrium); C is the heat capacity of the system; and $\lambda^{-1}$ is climate sensitivity.

The intuition here is pretty simple (via Kirk-Davidoff 2009, section 1.1). A high climate sensitivity results when there are system feedbacks that block heat from escaping. This escape-blocking lengthens the time to equilibrium. Suppose there is a step-up in solar insolation. The more the heat inside the system is blocked from escaping, the more the heat content of the system has to rise before the outgoing longwave radiation will come into energy balance with the incoming shortwave, and this additional heat increase takes time.

Time to equilibrium will also be longer the larger the heat capacity of the system. The surface of the planet has to get hot enough to push enough longwave radiation through the atmosphere to balance the increase in sunlight. The more energy gets absorbed into the oceans, the longer it takes for the surface to reach that necessary temperature.

$\tau = C\lambda^{-1}$ can be rewritten as $\lambda^{-1} = \tau/C$, so all Schwartz needs are estimates for τ and C, and he has an estimate for climate sensitivity.

Here too Schwartz keeps things as simple as possible. In estimating C, he treats the oceans as a single heat reservoir. Deeper layers participate less in absorbing and releasing heat than shallower ones, but all are assumed to respond in lockstep. There is no time-consuming process of heat transfer from upper layers to lower layers.

For the time constant, Schwartz assumes that changes in GMAST (the Global Mean Air Surface Temperature) can be regarded as Brownian motion, subject to Einstein's Fluctuation Dissipation Theorem. In other words, he is assuming that GMAST is "subject to random perturbations," but otherwise "behaves as a first-order Markov or autoregressive process, for which a quantity is assumed to decay to its mean value with time constant τ."

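To find τ, Schwartz examines the autocorrelation of the (detrended) temperature time series as a function of lag. If GMAST really is a first-order autoregressive process, its autocorrelation decays exponentially with lag, $r(\Delta t) = e^{-\Delta t/\tau}$, so each lag yields an estimate $\tau = -\Delta t/\ln r(\Delta t)$. This characteristic time to decorrelation is the time constant.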

The Team's critique

Given Schwartz' time constant estimate of 4-6 years:

The resultant equilibrium climate sensitivity, 0.30 ± 0.14 K/(W m⁻²), corresponds to an equilibrium temperature increase for doubled CO2 of 1.1 ± 0.5 K. ...

In contrast:

The present [IPCC 2007] estimate of Earth's equilibrium climate sensitivity, expressed as the increase in global mean surface temperature for doubled CO2, is [2 to 4.5 K].

These results get critiqued by Foster, Annan, Schmidt and Mann on a variety of theoretical grounds (like the iffiness of the randomness assumption), but their main response is to apply Schwartz' estimation scheme to runs of their own AR4 model under a variety of different forcing assumptions. Their model has a known climate sensitivity of 2.4 K, yet the sensitivity estimates produced by Schwartz' scheme average well below even the low estimate that he got from the actual GMAST data, suggesting that an actual sensitivity substantially above 2.4 K would still be consistent with Schwartz' results.

Part of the discrepancy could be from Schwartz' use of a lower heat capacity estimate than is used in the AR4 model, but Foster et al. judge that:

... the estimated time constants appear to be the greater problem with this analysis.

The AR4 model has known equilibration properties and "takes a number of decades to equilibrate after a change in external forcing," yet when Schwartz' method for estimating speed of equilibration is applied to model-generated data, it estimates the same minimal time constant as it does for GMAST:

Hence this time scale analysis method does not appear to correctly diagnose the properties of the model.

There's more, but you get the gist. It's not that Schwartz' basic approach isn't sensible. It's just the hyper-simplification of his model that makes this first attempt unrealistic. Others have since made significant progress in adding realism, in particular, by treating the different ocean levels as separate heat reservoirs with a process of energy transport between them.

Daniel Kirk-Davidoff's two heat-reservoir model

This is interesting stuff. Kirk-Davidoff finds that adding a second weakly coupled heat reservoir changes the behavior of the energy balance model dramatically. The first layer of the ocean responds quickly to any forcing, then over a much longer time period, this upper layer warms the next ocean layer until equilibrium is reached. This elaboration seems necessary as a matter of realism and it could well be taken further (by including further ocean depths, and by breaking the layers down into sub-layers).

K-D shows that when Schwartz' method for estimating the time constant is applied to data generated by a two heat-reservoir model it latches onto the rapid temperature response of the upper ocean layer (at least when used with such a short time series as Schwartz employs). As a result, it shows a short time constant even when the coupled equilibration process is quite slow:

Thus, the low heat capacity of the surface layer, which would [be] quite irrelevant to the response of the model to slowly increasing climate forcing, tricks the analysis method into predicting a small decorrelation time scale, and a small climate sensitivity, because of the short length of the observed time series. Only with a longer time series would the long memory of the system be revealed.

K-D says the time series would have to be:

[S]everal times longer than a model would require to come to equilibrium with a step-change in forcing.

And how long is that? Here K-D graphs temperature equilibration in response to a step-up in solar insolation for a couple of different model assumptions:

"Weak coupling" here refers to the two heat reservoir model. "Strong coupling" is the one reservoir model.

The initial jump up in surface temperatures in the two reservoir model corresponds to the rapid warming of the upper ocean layer, which in the particular model depicted here then warms the next ocean layer for another hundred plus years, with surface temperatures eventually settling down to a temperature increase more than twice the size of the initial spike.
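Kirk-Davidoff's point about the estimator latching onto the upper layer can be reproduced in the same kind of toy model. The Python sketch below uses a generic two-layer model with hypothetical parameters (not K-D's own). It computes the model's two true e-folding times from the eigenvalues of its equations, then drives it with random annual forcing for 125 years (roughly a record starting in 1880, an assumed length) and applies the lag-1 estimate τ = −1/ln r(1).

```python
import math
import random


def two_box_time_scales(c_s, c_d, lam, gamma):
    """Fast and slow e-folding times (years) of the continuous two-box model."""
    # Coefficients of d/dt [T_s, T_d] = A [T_s, T_d] with no forcing.
    a11, a12 = -(lam + gamma) / c_s, gamma / c_s
    a21, a22 = gamma / c_d, -gamma / c_d
    trace, det = a11 + a22, a11 * a22 - a12 * a21
    disc = math.sqrt(trace * trace - 4 * det)
    fast_rate, slow_rate = (trace - disc) / 2, (trace + disc) / 2  # both negative
    return -1 / fast_rate, -1 / slow_rate


def two_box_noise_run(n_years, c_s=8.0, c_d=100.0, lam=1.0, gamma=1.5, seed=0):
    """Upper-layer temperatures, one per year, under white-noise forcing."""
    rng = random.Random(seed)
    t_s = t_d = 0.0
    out = []
    for _ in range(n_years):
        f = rng.gauss(0.0, 1.0)
        flux_down = gamma * (t_s - t_d)
        t_s += (f - lam * t_s - flux_down) / c_s  # annual forward-Euler step
        t_d += flux_down / c_d
        out.append(t_s)
    return out


def lag1_time_constant(series):
    """Schwartz-style estimate tau = -1 / ln r(1) for annual data."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    r1 = sum(dev[i] * dev[i + 1] for i in range(n - 1)) / sum(d * d for d in dev)
    return -1 / math.log(r1)


fast, slow = two_box_time_scales(8.0, 100.0, 1.0, 1.5)
estimate = lag1_time_constant(two_box_noise_run(125))
print(round(fast, 1), round(slow, 1), round(estimate, 1))
```

With these parameters the fast time scale comes out near 3 years and the slow one near 170 years, yet the lag-1 estimate from a 125-year run comes out at a few years, close to the fast mode. Nothing in the short record reveals the slow, centuries-long equilibration that governs the final temperature.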

This bit of realism changes everything. Consider the implications of the two heat reservoir model for the main item of correlative evidence that Schwartz put forward in support of his short time constant finding.

The short recovery time from volcanic cooling

Here is Schwartz' summary of the volcanic evidence:

The view of a short time constant for climate change gains support also from records of widespread change in surface temperature following major volcanic eruptions. Such eruptions abruptly enhance planetary reflectance as a consequence of injection of light-scattering aerosol particles into the stratosphere. A cooling of global proportions in 1816 and 1817 followed the April, 1815, eruption of Mount Tambora in Indonesia. Snow fell in Maine, New Hampshire, Vermont and portions of Massachusetts and New York in June, 1816, and hard frosts were reported in July and August, and crop failures were widespread in North America and Europe – the so-called "year without a summer" (Stommel and Stommel, 1983). More importantly from the perspective of inferring the time constant of the system, recovery ensued in just a few years. From an analysis of the rate of recovery of global mean temperature to baseline conditions between a series of closely spaced volcanic eruptions between 1880 and 1920 Lindzen and Giannitsis [1998] argued that the time constant characterizing this recovery must be short; the range of time constants consistent with the observations was 2 to 7 years, with values at the lower end of the range being more compatible with the observations. A time constant of about 2.6 years is inferred from the transient climate sensitivity and system heat capacity determined by Boer et al. [2007] in coupled climate model simulations of GMST following the Mount Pinatubo eruption. Comparable estimates of the time constant have been inferred in similar analyses by others [e.g., Santer et al., 2001; Wigley et al., 2005].

All of which is just what one would expect from the two heat reservoir model. The top ocean layer responds quickly, first to the cooling effect of volcanic aerosols, then to the warming effect of the sun once the aerosols clear. But in the weakly coupled model, this rapid upper-layer response reveals very little about how quickly the full system equilibrates.
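The same toy two-layer model can illustrate the volcanic argument. In this Python sketch a hypothetical eruption imposes −3 W/m^2 of forcing for two years (an assumed, Pinatubo-scale round number, not a fitted value) on a generic two-layer model with the same illustrative parameters as above.

```python
def simulate_two_box(forcing, dt=0.1, c_s=8.0, c_d=100.0, lam=1.0, gamma=1.5):
    """Forward-Euler two-reservoir model; returns (upper, deep) temperature anomalies."""
    t_s = t_d = 0.0
    upper, deep = [], []
    for f in forcing:
        flux_down = gamma * (t_s - t_d)  # heat exchanged between the layers
        t_s += dt * (f - lam * t_s - flux_down) / c_s
        t_d += dt * flux_down / c_d
        upper.append(t_s)
        deep.append(t_d)
    return upper, deep


dt = 0.1
# Hypothetical eruption: -3 W/m^2 for the first two years, then nothing, for 50 years.
forcing = [-3.0 if k * dt < 2.0 else 0.0 for k in range(round(50 / dt))]
upper, deep = simulate_two_box(forcing, dt=dt)

coldest = min(upper)
ten_years_later = upper[round(12 / dt) - 1]  # t = 12 yr, ten years after the forcing stops
print(round(coldest, 2), round(ten_years_later, 3), round(deep[-1], 3))
```

The upper layer cools by roughly half a degree and recovers most of the way within a decade, the kind of quick rebound Schwartz and Lindzen and Giannitsis point to. Yet with these parameters the model's slow time scale is on the order of 170 years, and a small residual cooling lingers in the deep layer long after the surface has bounced back.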

Gavin Schmidt weighs in

Gavin Schmidt recently had occasion to comment on the time to equilibrium:

Oceans have such a large heat capacity that it takes decades to hundreds of years for them to equilibrate to a new forcing.

This is not an unconsidered remark. Schmidt was one of the co-authors of The Team's response to Schwartz. Thus Mike Lockwood's suggestion that "[a]lmost all estimates have been in the 1-10 year range," is at the very least passé. The clearly increased realism of the two reservoir model makes it perfectly plausible that the actual speed of equilibration, especially in response to a long-period forcing, could be quite slow.
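Schmidt's "decades to hundreds of years" follows from simple arithmetic. A rough Python sketch, assuming round-number seawater properties, an ocean covering 71% of the globe, and a hypothetical feedback parameter of 1.2 W m^-2 K^-1, computes the single-box time constant τ = C/λ for a 100 m mixed layer and for a layer as deep as the average ocean (about 3,700 m):

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
SEAWATER_DENSITY = 1025.0        # kg/m^3, approximate
SEAWATER_HEAT_CAPACITY = 3990.0  # J/(kg K), approximate
OCEAN_FRACTION = 0.71            # share of Earth's surface covered by ocean


def ocean_heat_capacity(depth_m):
    """Heat capacity per m^2 of Earth's surface, in W yr m^-2 K^-1, for a
    well-mixed ocean layer of the given depth."""
    joules_per_kelvin = OCEAN_FRACTION * SEAWATER_DENSITY * SEAWATER_HEAT_CAPACITY * depth_m
    return joules_per_kelvin / SECONDS_PER_YEAR


def one_box_time_constant(depth_m, feedback=1.2):
    """tau = C / lambda; `feedback` (W m^-2 K^-1) is a hypothetical value."""
    return ocean_heat_capacity(depth_m) / feedback


for depth in (100.0, 3700.0):  # a mixed layer vs. roughly the mean ocean depth
    print(depth, round(ocean_heat_capacity(depth), 1), round(one_box_time_constant(depth), 1))
```

The mixed layer alone gives a time constant of several years, while the full ocean depth gives a few centuries. These single-box numbers are only bookends for intuition: the real ocean is neither one thin layer nor one well-mixed column, which is exactly why the two-reservoir model matters.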

Eventually, good total ocean heat content data will reveal near-exact timing and magnitude for energy flows in and out of the oceans, allowing us to resolve which candidate forcings actually force, and how strongly. We can also look forward to enough sounding data to directly observe energy transfer between different ocean depths over time, revealing exactly how equilibration proceeds in response to forcing. But for now, time to equilibration would seem to be a wide open question.

Climate sensitivity

This also leaves climate sensitivity as an open question, at least as estimated by heat capacity and equilibration speed. Roy Spencer noted this in support of his more direct method of estimating climate sensitivity, holding that the utility of the fluctuation dissipation approach:

... is limited by sensitivity to the assumed heat capacity of the system [e.g., Kirk‐Davidoff, 2009].

The simpler method we analyze here is to regress the TOA [Top Of Atmosphere] radiative variations against the temperature variations.
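The regression Spencer describes is ordinary least squares. A minimal Python sketch, using made-up anomaly values and assuming the flux is counted positive when it leaves the planet (sign conventions vary), shows the calculation; the slope, in W m^-2 K^-1, plays the role of a feedback parameter. Spencer's actual treatment of the satellite data is not reproduced here.

```python
def ols_slope(x, y):
    """Least-squares slope of y regressed on x (an intercept is fitted implicitly)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


# Hypothetical monthly anomalies: temperature (K) and net outgoing TOA flux (W/m^2).
temperature = [0.10, -0.20, 0.30, 0.00, -0.10, 0.25]
toa_outgoing = [3.0 * t + 0.5 for t in temperature]  # built with a slope of 3.0
print(round(ols_slope(temperature, toa_outgoing), 3))  # 3.0
```

The toy data are built with a slope of exactly 3.0, so the fit recovers it. Dividing the roughly 3.7 W/m^2 forcing usually quoted for doubled CO2 by such a slope gives the implied warming per doubling, so a larger slope means a smaller sensitivity.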

Solar warming is being improperly dismissed

For solar warming theory, the implications of equilibration speed being an open question are clear. We have a host of climatologists and solar scientists who have been dismissing a solar explanation for late 20th century warming on the strength of a short-time-to-equilibrium assumption that is not supported by the evidence. Thus a solar explanation remains viable and should be given much more attention, including much more weight in predictions of where global temperatures are headed.

If 20th century warming was caused primarily by the 20th century's 80-year grand maximum of solar activity, then it was not caused by CO2, which must be relatively powerless: little able either to cause future warming or to mitigate the global cooling that the present fall-off in solar activity portends. The planet likely sits on the cusp of another Little Ice Age. If we unplug the modern world in an unscientific war against CO2, our grandchildren will not thank us.

Addenda

There is more to Lockwood's response, and more that I want to say about it, but this post is already quite long, so for anyone who wants to comment, please don't feel that due diligence requires reading this additional material.

Addendum 1: A slow equilibration process does not necessarily support solar-warming theory and a fast equilibration process does not necessarily support the CO2-warming theory

In my enthusiasm to pitch the importance of being explicit about the role of equilibrium, my email suggested that it could provide a test of which theory is right. If these scientists could show that the temperature gradient of the oceans had reached equilibrium by 1970, or 1986, then their grounds for dismissing a solar explanation for late-20th century warming would be upheld. If equilibrium was not reached, that would support the solar warming theory.

Well, to some extent maybe, but equilibrium alone doesn't really resolve the issue. This was the second topic of Professor Lockwood's response:

Incidentally, one thing I cannot agree on in your e-mail is that the response time is the only thing separating solar and anthropogenic “theories”. Our understanding of greenhouse trapping (by water vapour, CO2, methane etc) predicts the right level of GMAST (without it the GMAST would be -21 degrees) and it also predicts that changing from 280 ppm by volume of CO2 to 360 (which has happened since pre-industrial times) will have caused a GMAST change by a sizeable fraction of a degree – the science of the radiative properties of CO2 are too well understood and verified for that not to be true. (There are utterly false and unscientific statements around on the blogosphere that adding CO2 doesn’t add to the greenhouse trapping – this is completely wrong. It is true of some CO2 absorption lines but not of them all so if you integrate over the spectrum (rather than selecting bits of it!) one finds a radiative forcing rise that matches the observed GMAST rise very well indeed. Our best estimates of the corresponding radiative forcing by solar change are smaller by a factor of at least 10. So solar change is too small to have caused the rise we have seen, greenhouse trapping by extra CO2 is not.

I would just disagree with Mike's conclusion. The reason the IPCC fixes the radiative forcing effect of solar variation at less than 1/10th the effect of CO2 (0.12 vs 1.66 W/m^2) is that the only solar effect the IPCC takes into account is the tiny variation in Total Solar Irradiance. TSI, or "the solar constant," varies by one or two tenths of a percent over the solar cycle, while other measures of solar activity can vary by an order of magnitude. If 20th century warming was driven by the sun, it pretty much has to have been driven by something other than TSI.

I hope that helps

Mike Lockwood

Maybe the spectrum shift that accompanies solar magnetic activity has a climate-impacting effect on atmospheric chemistry, or it could be the solar wind, deflecting Galactic Cosmic Radiation from seeding cloud formation. That is Svensmark's GCR-cloud theory. Noting that TSI alone could not have caused 20th century warming does nothing to rebut these solar warming theories.
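The arithmetic behind the small TSI forcing is short. A back-of-envelope Python sketch, assuming a TSI of about 1361 W/m^2, a planetary albedo of 0.30, and a 0.1% swing over the solar cycle: the change in TSI is divided by 4 (the ratio of Earth's cross-section to its surface area) and reduced by the reflected share.

```python
TSI = 1361.0            # W/m^2, approximate modern total solar irradiance
ALBEDO = 0.30           # approximate planetary albedo
cycle_fraction = 0.001  # ~0.1% change over a solar cycle (assumed round number)

delta_tsi = TSI * cycle_fraction
# Spread over the whole sphere (factor 1/4) and remove the reflected share.
forcing = delta_tsi * (1 - ALBEDO) / 4

ipcc_solar, ipcc_co2 = 0.12, 1.66  # W/m^2, the IPCC values quoted above
print(round(delta_tsi, 2), round(forcing, 3), round(ipcc_co2 / ipcc_solar, 1))  # prints 1.36 0.238 13.8
```

A 0.1% cycle swing works out to only about a quarter of a watt per square meter of global-mean forcing, and the IPCC figures quoted above (whose 0.12 W/m^2 solar value refers to the estimated change since 1750) differ by a factor of nearly 14. That is the sense in which TSI alone is too small; it says nothing about the other solar mechanisms mentioned above.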

Where I think Svensmark would agree with Lockwood is in rejecting my suggestion that knowing the state of equilibrium in 1970 or 1980 would tell us which theory is right. His own reply to Lockwood claims that if ocean surface temperature oscillations are controlled for, then the surface temperature tracks the ups and downs in the solar cycle to a tee. I asked Doctor Svensmark if he wanted to chime in and did not get a reply, but there's a good chance he would fall in the short-path-to-equilibrium camp. As the two heat-reservoir model shows, rapid surface temperature responses to forcing do not imply rapid equilibration, but a lot of people are reading them that way.

Also, it is possible that time to equilibrium is slow, but that the 300 year climb out of the Little Ice Age just did happen to reach equilibrium in the late 20th century. Thus I have to agree with Mike that time-to-equilibrium is not in itself determinative. It is one piece of the puzzle. What makes equilibrium particularly needful of attention is how it has been neglected, with so many scientists making crucial assumptions about equilibrium without being explicit about it, or making any argument for those assumptions.

Addendum 2: collection of quotes from scientists

Below is a list of the dozen scientists I emailed, together with quotes where they dismiss a solar explanation for recent warming on grounds that solar activity was not rising, with no qualification about whether equilibrium had been reached. These are just the ones I happened to bookmark over the last few years. Undoubtedly there are many more. It's epidemic!

Most of these folks have responded, so I'll be posting at least 3 more follow-ups as I can get to them.

Professors Usoskin, Schuessler, Solanki and Mursula (2005):

The long term trends in solar data and in northern hemisphere temperatures have a correlation coefficient of about 0.7–0.8 at a 94%–98% confidence level. …

Solanki (2007):

Note that the most recent warming, since around 1975, has not been considered in the above correlations. During these last 30 years the total solar irradiance, solar UV irradiance and cosmic ray flux has not shown any significant secular trend, so that at least this most warming episode must have another source.

"Since 1970, the cosmic ray flux has not changed markedly while the global temperature has shown a rapid rise," [Solanki] says. "And that lack of correlation is proof that the Sun doesn't cause the warming we are seeing now."

Solanki and Krivova (2003):

Clearly, correlation coefficients provide an indication that the influence of the Sun has been smaller in recent years but cannot be taken on their own to decide whether the Sun could have significantly affected climate, although from Figure 2 it is quite obvious that since roughly 1970 the Earth has warmed rapidly, while the Sun has remained relatively constant.

Professors Lockwood and Fröhlich (2007):

There is considerable evidence for solar influence on the Earth's pre-industrial climate and the Sun may well have been a factor in post-industrial climate change in the first half of the last century. Here we show that over the past 20 years, all the trends in the Sun that could have had an influence on the Earth's climate have been in the opposite direction to that required to explain the observed rise in global mean temperatures.

Professor Benestad (2004):

Svensmark and others have also argued that recent global warming has been a result of solar activity and reduced cloud cover. Damon and Laut have criticized their hypothesis and argue that the work by both Friis-Christensen and Lassen and Svensmark contain serious flaws. For one thing, it is clear that the GCR does not contain any clear and significant long-term trend (e.g. Fig. 1, but also in papers by Svensmark).

Professor Phil Jones (interview with the BBC, February 2010):

And 2005:

A further comparison with the monthly sunspot number, cosmic galactic rays and 10.7 cm absolute radio flux since 1950 gives no indication of a systematic trend in the level of solar activity that can explain the most recent global warming.

Natural influences (from volcanoes and the Sun) over this period [1975-1998] could have contributed to the change over this period. Volcanic influences from the two large eruptions (El Chichon in 1982 and Pinatubo in 1991) would exert a negative influence. Solar influence was about flat over this period. Combining only these two natural influences, therefore, we might have expected some cooling over this period.

Dr. Piers Forster (quoted by the BBC, October 2009):

The scientists' main approach was simple: to look at solar output and cosmic ray intensity over the last 30-40 years, and compare those trends with the graph for global average surface temperature.

Professor Johannes Feddema (quoted in the Topeka Capital-Journal, September 2009):

And the results were clear. "Warming in the last 20 to 40 years can't have been caused by solar activity," said Dr Piers Forster from Leeds University, a leading contributor to this year's Intergovernmental Panel on Climate Change (IPCC).

Feddema said the warming trend earlier in the century could be attributed to anything from solar activity to El Ninos. But since the mid 1980s he believes data doesn't correlate well with solar activity, but does correlate well with rising CO2 levels.

Professor Kristjánsson (quoted by Science Daily in 2009):

Kristjansson also points out that most research shows no reduction in cosmic rays during the last decades, and that an astronomic explanation of today’s global warming therefore seems very unlikely.

Plus here's a new one I just found. Ramanathan is paraphrased in India's Frontline magazine this month as saying:

GCR trends (as seen in Graph 2) underwent monotonic decrease from 1900 to 1970 and then levelled off. The trends do not seem to reflect the large warming trend during 1970-2010.

I'll see if he wants to weigh in too. If anyone finds more, feel free to send them to alec-at-rawls-dot-org.

Addendum 3: How little Lockwood's own position is at odds with a solar explanation for late 20th century warming

Suppose the solar activity peak was not in 1985, as Lockwood and Fröhlich claim, but several years later, as a straightforward reading of the data suggests. (As documented in Part 1, solar cycle 22, which began in 1986, was more active than cycle 21 by pretty much every measure.) If the solar activity peak shifts five years, then instead of predicting peak GMAST by 1995, Mike's temperature response formula says peak GMAST should have occurred by 2000, which is pretty close to when it did occur.

Then there is the lack of warming since 2000, which is fully compatible with a solar explanation for late 20th century warming but is seriously at odds with the CO2 theory. Of course 10 or 15 years is not enough data to prove or disprove either theory, but the episode that is held to require a CO2 explanation is even slighter. The post-1970s warming that is said to be incompatible with a solar explanation didn't show a clear temperature signal until 1997 or 1998:

By this standard, the subsequent decade of no warming should be seen as even stronger evidence that climate is being driven by the sun, and the quicker the oceans equilibrate, the less room there is for CO2 driven warming to be hidden by ocean damping. Maybe it is time for another update: "Recent samely directed solar climate forcings and global temperature."

Not samely directed trends, because the equilibration mechanism has to be accounted for, but a leveling off of surface temperatures is just what a solar-driven climate should display when solar activity plummets. This most recent data is a big deal. It's hard to justify reading so much into the very late 20th century step-up in temperature while ignoring the 21st century's lack of warming.

Addendum 4: Errant dicta from Lockwood and Fröhlich

Professor Lockwood says that his updated paper with Claus Fröhlich ("Recent Samely Directed Trends II") addresses the issue of equilibrium. Here is the second paragraph of their updated paper:

In paper 1 [the original Lockwood and Fröhlich article that I cited in my email], we completely removed the solar cycle variations to reveal the long-term trends. The actual response of the climate system may not have as long a time constant as the procedure adopted in paper 1. Indeed, as discussed in §3 of the present paper and in paper 3, the fact that solar cycle variations are not completely damped out tells us that this is not the whole story. The key unknown is how deeply into the Earth’s oceans a given variation penetrates. If it penetrates sufficiently deeply, the large thermal capacity of the part of the ocean involved would mean that the time constant was extremely long (Wigley & Raper 1990); in such a case, the analysis of long-term trends given in paper 1 would be adequate to fully describe the Earth’s response to solar variations [emphasis added]. However, the recent studies suggest that the sunspot cycle variations in solar forcing, in particular, do not penetrate very deeply into the oceans and so the time constant is smaller; this means that solar cycle variations are not completely suppressed. For example, Douglass et al. (2004) report a response time to solar variations of τ < 1 year and, recently, Schwartz (2007) reports an overall time constant (for all forcings and responses) of τ = 5 ± 1 years. In §3, we show that this uncertainty in the response time constant does not influence our conclusion in paper 1 that the upward trend in global mean air surface temperature cannot be ascribed to solar variations.

In the emphasized sentence above (the one marked [emphasis added]), Lockwood and Fröhlich are making the same mistake that they made in their first paper. A long time-to-equilibrium would most certainly NOT leave the conclusions of paper 1 unchanged. There it was assumed that as soon as solar activity passed its peak, this passage should tend to create global cooling, which would only be the case if the oceans had already equilibrated to the peak level of forcing.

In effect, paper 1 assumes that equilibrium is immediate. If equilibrium instead takes a long time, then equilibrium would not have been reached in 1985, in which case the continued near-peak levels of solar activity over cycle 22 would have continued to cause substantial warming.
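How much a solar cycle gets "damped out" can be quantified for the simplest case. For a single reservoir with time constant τ = C/λ driven by a sinusoidal forcing of period P, the steady response has amplitude (F0/λ)/√(1 + (ωτ)²) and lags the forcing by arctan(ωτ)/ω, where ω = 2π/P. A Python sketch for an 11-year cycle, with illustrative τ values of my own choosing:

```python
import math


def cycle_gain(tau_years, period_years=11.0):
    """Amplitude of a one-box response to sinusoidal forcing, as a fraction of
    the equilibrium response F0/lambda: 1 / sqrt(1 + (omega*tau)^2)."""
    omega = 2 * math.pi / period_years
    return 1 / math.sqrt(1 + (omega * tau_years) ** 2)


def cycle_lag(tau_years, period_years=11.0):
    """Delay (years) between forcing peaks and temperature peaks: atan(omega*tau)/omega."""
    omega = 2 * math.pi / period_years
    return math.atan(omega * tau_years) / omega


for tau in (1.0, 5.0, 30.0):  # illustrative time constants in years
    print(tau, round(cycle_gain(tau), 3), round(cycle_lag(tau), 2))
```

In this one-box picture a 1-year time constant passes most of the 11-year cycle through, a 5-year one passes about a third, and a 30-year one only about 6%. In a two-reservoir model, though, the cycle can show up clearly in surface temperature through the fast upper layer even while the slow equilibration of the deep layer continues, which is why the visibility of the cycle does not by itself settle the time-to-equilibrium question.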

Still, this is only what a lawyer would call "dicta": the parts of an opinion that don't carry the weight of the decision but simply comment on roads not taken. Lockwood and Fröhlich proceed to argue that time-to-equilibrium is short, so their assertions about the case where time-to-equilibrium is long are dicta, but the passage does raise the question of whether they grasp the implications that time-to-equilibrium actually has for their argument.

Addendum 5: an economist's critique of Schwartz

Start with Schwartz' assumption that forcings are random. Not only does solar activity follow a semi-regular 11-year cycle, but Schwartz only looks at GMAST going back to 1880, a period over which solar activity was semi-steadily rising, as was CO2. Certainly the CO2 increase has been systematic, and solar fluctuations may well be too, so it seems the forcings are not all that random.

I'm not a physicist so I can't say how critical the randomness assumption is to Schwartz' model, but I have a more general problem with the randomness assumption. My background is economics, which is all about not throwing away information, and the randomness assumption throws away information in spades.

By assuming that perturbations are random Schwartz is setting aside everything we can say about the actual time-sequence of forcings. We know, for instance, that the 1991-93 temperature dip coincided with a powerful forcing: the Mount Pinatubo eruption. This allows us to distinguish at least most of this dip as a perturbation rather than a lapse to equilibrium, and this can be done systematically. By estimating how volcanism has affected temperature over the historical record, we can with some effectiveness control for its effects over the entire record. Similarly with other possible forcings. To the extent that their effects are discernable in the temperature record they can be controlled for.

Making use of this partial ability to distinguish perturbations from lapses would give a better picture of the lapse to equilibrium and a better estimate of the lapse rate. Thus even if Schwartz's estimation scheme could be legitimate (maybe given a long enough GMAST record?) the way that it throws away information means that it certainly cannot be efficient. Schwartz's un-economic scheme cannot produce a "best estimate," and probably should not be referenced as such.
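A toy version of the point about not throwing away information: if a known forcing such as a volcanic index is regressed out first, persistence is measured on what remains, rather than being inflated by dips whose cause we already know. The Python sketch below uses an entirely synthetic record (two hypothetical four-year eruptions plus white noise); it is not a reconstruction of Schwartz's analysis or of any published volcanic correction.

```python
import random


def ols_fit(x, y):
    """Intercept and slope of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    b = sum((u - mean_x) * (v - mean_y) for u, v in zip(x, y)) / sum(
        (u - mean_x) ** 2 for u in x
    )
    return mean_y - b * mean_x, b


def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation after removing the mean."""
    n = len(series)
    mean = sum(series) / n
    dev = [s - mean for s in series]
    return sum(dev[i] * dev[i + 1] for i in range(n - 1)) / sum(d * d for d in dev)


rng = random.Random(1)
n = 120
# Hypothetical volcanic index: two four-year dips of unit depth.
volcanic = [-1.0 if 40 <= k < 44 or 80 <= k < 84 else 0.0 for k in range(n)]
# Synthetic record: 1 K of cooling per unit of the index plus small white noise.
temps = [v + rng.gauss(0.0, 0.05) for v in volcanic]

a, b = ols_fit(volcanic, temps)
residuals = [t - (a + b * v) for t, v in zip(temps, volcanic)]
print(round(b, 2), round(lag1_autocorrelation(temps), 2),
      round(lag1_autocorrelation(residuals), 2))
```

In the raw series the two eruption dips dominate the lag-1 autocorrelation and make the record look persistent; after the known forcing is removed, the residuals show essentially none, as they should, since the synthetic noise is white.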

Friday, March 04, 2011

Is ATF gun-running operation a ploy to demonize U.S. gun rights?

Has to be, because the rationale that ATF higher-ups gave to the operators makes no sense at all:

Remember, ATF is not in any way following the guns they "let walk." Their own claim is that they are just looking to see where the guns end up, which offers no value added for identifying the gang leaders who are buying them. If authorities can't trace a low-level gang member's Croatian gun back to the gang leader who bought it, then they can't trace an ATF gun back to the gang leader who bought it.

The ATF is providing a blatantly fraudulent excuse for its gun running, and Weissert takes it as his job to make this excuse look as good as possible. That this is not just stupidity on Weissert's part, but is willful deception, is proved by his pretense that Terry was murdered with an illegally sold weapon. He is self-consciously hiding the truth from his readers. Evil trash.

Another Weissert tidbit:

These issues have been much discussed, and Weissert's article shows enough research that he would certainly be aware of them, yet he leaves the crucial context out, and again, the fact that he lies by omission about the Terry murder gun indicates that these other omissions are also intentional.

Crossposted at Flopping Aces and Astute Bloggers.

UPDATE III: direct proof that ATF was using Gunrunner sales to attack Gunrunner-involved gun shops

Just before agent Terry was murdered, ATF had used trace data on Gunrunner gun sales to slander two of its Gunrunner gun shops. That data had been leaked to WAPO by ATF, which leaked similar data to Mexican newspapers.

Agent Dodson and other sources say the gun walking strategy was approved all the way up to the Justice Department. The idea was to see where the guns ended up, build a big case and take down a cartel. And it was all kept secret from Mexico.

How could it possibly matter where criminal Mexican drug gangs get their illegal weapons? ALL their guns are illegal under Mexican law. If the illegality of their weapons could bring them down, there would be no need for their guns to come from America.

Unlike our sweepingly stated Second Amendment, the Mexican constitution only enumerates a highly qualified right to possess arms, and as in my state of California, enumerated gun rights in Mexico are not enforced. Thus Mexico's armed-to-the-teeth criminal gangs could in theory be taken down by gun prosecutions (if Mexico had the wherewithal to do it) by enforcing MEXICAN law. Evidence of violations of AMERICAN law is completely irrelevant, never mind its being tainted by U.S. government complicity.

The only actual reason the ATF would have to let American-sourced guns "walk" into Mexico is to provide facts in support of the Obama administration's ongoing effort to blame Mexican gun violence on American arms dealers, as justification for further infringing the constitutionally protected gun rights of American citizens. They have been putting out trumped up charges of U.S. blame for Mexican drug violence since they got into office, but their weak evidence hasn't stood up to scrutiny. So they decided to manufacture some hard evidence. It is the only logical explanation.

Where do Mexican drug-war weapons actually come from? This from James G. Conway Jr., "a former FBI special agent and program manager of FBI counterterrorism operations in Latin America":

On the surface, a cursory review of the high-powered military-grade seized weaponry -- grenades, RPGs, .50-caliber machine guns -- clearly indicates this weaponry is not purchased at gun shows in the United States and can't even be found commercially available to consumers in the United States. The vast majority of the weaponry used in this "drug war" comes into Mexico through the black market and the wide-open, porous southern border of Mexico and Guatemala. This black market emanates from a variety of global gunrunners with ties to Russian organized crime and terror groups with a firm foothold in Latin America such as the FARC, as well as to factions leftover from the civil wars in Central America during the 1980's. Chinese arms traffickers have managed to disperse weapons, particularly grenades, on the streets of Mexico. In addition, many of the Mexican military-issued, Belgian-made automatic M-16s currently in the hands of traffickers have been pilfered from military depots and the thousands who have deserted the Mexican army in recent years.

The exception would be those few thousands of semi-automatic M-16s and AK-47s let into Mexico by the ATF, along with however many Barrett .50s. Yeah, those are serious weapons. Obama's minions definitely added to the murder spree, all in pursuit of their unconstitutional war against gun rights here at home.

Mexico getting guns from America?

For those leftists who have trouble with this language, no, Mexico is NOT part of America. It is part of North America (the continent) and is part of The Americas (the two continents). Would you tell someone that you are from the continent of America? Are you dumber than a third grader?

Under "countries" for $200 Alex, the case is just as unambiguous. The only country with America in its name is The United States of America. We, and only we, are "America."

Wrong and wrong, pea brains.

UPDATE: Evidence that the ATF did indeed have anti-American skullduggery in mind

The Christian Science Monitor reports that one of ATF's objectives was indeed to prosecute the involved gun dealers:

Under the ATF’s Operation Fast and Furious, gun smugglers were allowed to buy the weapons in the hopes the US agents could track the firearms to the Mexican drug runners and other border-area criminal gangs as well as build cases against the gun dealers themselves.

But it is known that the dealers were operating under instruction from the ATF:

Dodson said some of the cooperating gun dealers who sold weapons to the suspects at ATF’s behest initially had concerns and wanted to end their sales. One even asked whether the dealers might have a legal liability, but was assured by federal prosecutors they would be protected, he said.

Was ATF planning on double-crossing the dealers? That fits with ATF's willingness to deny involvement, even to the point of lying to Senator Grassley about it. This would be natural if they were planning from the beginning on lying in court. And notice how easily the videotaped sales (at the 1 minute mark here) could be used as evidence of lawbreaking by the dealers, if the ATF just denied that the dealers were operating under their instructions.

The case logs show ATF supervisors and U.S. attorney’s office lawyers met with some of the gun dealers to discuss their “role” in the case, in one instance as far back as December 2009.

They DID deny this to Grassley, automatically hanging the dealers out to dry. Thus, at least from the moment one of the ATF guns was used by Mexican bandits to murder US Border Patrol agent Brian Terry, prosecution of the dealers became the ATF's de facto strategy. Add the report from the Christian Science Monitor that the ATF was after the gun dealers all along, and the fact that they actually did try to pin the murder gun on the dealers becomes powerful confirmation.

UPDATE II: AP dirtbag Will Weissert lying by omission

How could ATF and DOJ think they could get away with lying about their involvement in the sale of the rifle that was used to murder Brian Terry? They know that the press wants to omit the same facts that they do. This from AP's Will Weissert:

BROWNSVILLE, Texas -- Federal agents are barely able to slow the river of American guns flowing into Mexico. In two years, a new effort to increase inspections of travelers crossing the border has netted just 386 guns - an almost infinitesimal amount given that an estimated 2,000 slip across each day.

Never does the article note that the murder gun was sold to Mexican criminals on the instructions of the ATF. Instead, the article uses this consequence of ATF perfidy as an excuse to retail fabricated propaganda from Bill Clinton's anti-gun zealots. He does note that their report "did not include information on how [the 2,000 illegal guns a day] figure was reached," but that does not stop him from touting it as "the last comprehensive estimate on the subject." How does he know the study was comprehensive if they never revealed where their estimates came from? Is the study comprehensive if they pulled the numbers out of thin air?

The problem came into sharp focus again last month when a U.S. Immigration and Customs Enforcement agent was killed on a northern Mexican highway with a gun that was purchased in a town outside Fort Worth, Texas.

Weissert goes on to mention the ATF gun running scandal, but instead of letting his readers know that the current news story, the murder of agent Terry, is part of it, he just offers the ATF's nonsensical justification as his own analysis:

The ATF's work on the border highlights the tension between short-term operations aimed at arresting low-level straw buyers - legal U.S. residents with clean records who buy weapons - and long-term ones designed to identify who is directing the gun buys.

Since he is presenting this as his own understanding, he ought to note that if the Mexican government wants to identify who is directing illegal gun buys, they have plenty of cases to look at already. Under the guise of showing the magnitude of the problem that ATF is trying to solve, Weissert even provides some relevant figures:

From September 2009 to July 31 of last year alone, the Mexican government seized more than 32,000 illegal weapons, even though purchasing guns in Mexico requires permission from the country's defense department, and even then buyers are limited to pistols of .38-caliber or less.

Mexican authorities already know where the illegal guns are going because they are finding large numbers of them on a daily basis. As for their ability to prosecute gang leaders for illegal weapons that are confiscated from low-level gang members, that problem is the same whether the guns come from the ATF or from Croatia.

Remember, ATF is not in any way following the guns they "let walk." Their own claim is that they are just looking to see where the guns end up, which offers no added value for identifying the gang leaders who are buying them. If authorities can't trace a low-level gang member's Croatian gun back to the gang leader who bought it, then they can't trace an ATF gun back to the gang leader who bought it.

The ATF is providing a blatantly fraudulent excuse for its gun running, and Weissert takes it as his job to make this excuse look as good as possible. That this is not just stupidity on Weissert's part, but willful deception, is proved by his pretense that Terry was murdered with an illegally sold weapon. He is self-consciously hiding the truth from his readers. Evil trash.

Another Weissert tidbit:

Many guns used to kill in Mexico never have their origins traced. Still, ATF has long estimated that of the weapons discovered at Mexican crime scenes which authorities do choose to trace, nearly 90 percent are eventually found to have been purchased in the U.S.

Duh. Guns legal for sale in the United States are identifiable by their import stamps, serial number ranges, and other markings. Those are the guns that are examined for U.S. gun trace data. Even then, the 90% figure only refers to those guns that are successfully traced. Of course the guns that the U.S. actually has trace data for are almost entirely U.S. guns. The actual percentage of Mexican crime guns that have been traced to America is 12 percent.

These issues have been much discussed, and Weissert's article shows enough research that he would certainly be aware of them, yet he leaves the crucial context out. And again, the fact that he lies by omission about the Terry murder gun indicates that these other omissions are also intentional.

Crossposted at Flopping Aces and Astute Bloggers.

UPDATE III: direct proof that ATF was using Gunrunner sales to attack Gunrunner-involved gun shops

Just before agent Terry was murdered, ATF had used trace data on Gunrunner gun sales to slander two of its Gunrunner gun shops. That data had been leaked to WaPo by ATF, which also leaked similar data to Mexican newspapers.